In Archlinux, the initial ramdisk, which contains the kernel modules needed to boot the system, is generated by a bash script named mkinitcpio. In /etc/mkinitcpio.conf you specify which modules and hooks you need at boot, and then you build the initrd image (which Archlinux calls initramfs-linux.img) with mkinitcpio -p linux.

mkinitcpio works out of the box for most standard configurations, i.e. a root filesystem on a regular, non-LVM partition, no encrypted partitions, etc. In my case, however, root, home, var and other system partitions are LVM2 logical volumes, and only /boot is a regular partition. In addition, these LVM2 volumes are stored inside a LUKS encrypted container. The initramfs-linux.img created with the default mkinitcpio settings cannot unlock encrypted partitions or find a root filesystem located inside LVM2, so you must customize mkinitcpio.conf accordingly.

mkinitcpio.conf settings for root partition located inside of LVM2 in LUKS (using sd-encrypt hook)

Inside /etc/mkinitcpio.conf locate the line starting with HOOKS=

The default settings as of Dec. 31, 2016 should look something like:

HOOKS="base udev autodetect modconf block filesystems keyboard fsck"

You should edit this line so it looks like:

HOOKS="base systemd sd-encrypt autodetect modconf block sd-lvm2 filesystems keyboard fsck"

Instead of the udev hook, you will use the systemd hook, and LUKS partitions will be unlocked by systemd's sd-encrypt hook. Also notice that an LVM2 hook is present, but it is the systemd version, sd-lvm2.

Once you have edited mkinitcpio.conf, regenerate your initial ramdisk as root with mkinitcpio -p linux.
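If you prefer to script the HOOKS change instead of editing the file by hand, a small Bash function like the one below should do it. This is a minimal sketch under a few assumptions: set_mkinitcpio_hooks is a name I made up (it is not part of mkinitcpio), the file uses the quoted HOOKS="..." syntax shown above, and there is exactly one uncommented HOOKS= line. GNU sed's -i.bak keeps a copy of the original file.

```shell
#!/bin/bash
# set_mkinitcpio_hooks: hypothetical helper, not part of mkinitcpio.
# Replaces the uncommented HOOKS= line of the given config file and keeps
# the original as <file>.bak. Assumes the quoted HOOKS="..." syntax and a
# hook list without '/' or '&' characters (they are special to sed).
set_mkinitcpio_hooks() {
    local conf="$1" hooks="$2"
    # Only lines that start with HOOKS= are rewritten; commented examples stay
    sed -i.bak "s/^HOOKS=.*/HOOKS=\"${hooks}\"/" "$conf"
    # Print the result so it can be checked before rebuilding the image
    grep '^HOOKS=' "$conf"
}

# Usage (as root), then rebuild the image:
#   set_mkinitcpio_hooks /etc/mkinitcpio.conf \
#       "base systemd sd-encrypt autodetect modconf block sd-lvm2 filesystems keyboard fsck"
#   mkinitcpio -p linux
```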

The next step is to add kernel boot options to GRUB (for BIOS machines) or to systemd-boot, the bootloader managed with bootctl (for UEFI machines).

Kernel boot options for sd-encrypt and sd-lvm2 in /etc/default/grub (BIOS)

If you have an older computer that uses BIOS instead of UEFI firmware, you can boot Archlinux with GRUB. You have to edit /etc/default/grub with the appropriate options for sd-encrypt and then regenerate your GRUB configuration in /boot/grub/grub.cfg.

Find the line GRUB_CMDLINE_LINUX_DEFAULT= and edit it as follows:

GRUB_CMDLINE_LINUX_DEFAULT="luks.uuid=72369889-3971-4039-ab17-f4db45aeeef2 root=UUID=f4dbfa30-35ba-4d5e-8d43-a7b29652f575 rw"

Note that luks.uuid is the UUID of the LUKS encrypted partition itself, i.e. the UUID it has BEFORE it has been opened with cryptsetup open.

You can find the UUID for all partitions with blkid

root=UUID= refers to the UUID of your root filesystem, which resides inside an LVM logical volume that is itself inside the LUKS encrypted container.
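To avoid copy-and-paste mistakes with long UUIDs, blkid can print just the UUID of a device (blkid -s UUID -o value DEVICE), and a small Bash function can assemble the parameters. This is a sketch: sd_encrypt_cmdline is a hypothetical name, and /dev/sda2 in the usage comment is a placeholder for your LUKS partition. The root filesystem's UUID can only be read once the LUKS container is open and the volume group is active.

```shell
#!/bin/bash
# sd_encrypt_cmdline: hypothetical helper that prints the sd-encrypt
# kernel parameters in the same form as GRUB_CMDLINE_LINUX_DEFAULT above.
#   $1 = UUID of the LUKS partition itself (not the opened mapping)
#   $2 = UUID of the root filesystem inside the LVM logical volume
sd_encrypt_cmdline() {
    local luks_uuid="$1" root_uuid="$2"
    if [[ -z "$luks_uuid" || -z "$root_uuid" ]]; then
        echo "usage: sd_encrypt_cmdline LUKS_UUID ROOT_UUID" >&2
        return 1
    fi
    printf 'luks.uuid=%s root=UUID=%s rw\n' "$luks_uuid" "$root_uuid"
}

# Usage (as root; /dev/sda2 is a placeholder for your LUKS partition):
#   luks_uuid=$(blkid -s UUID -o value /dev/sda2)
#   root_uuid=$(blkid -s UUID -o value /dev/mapper/ARCH-rootvol)
#   sd_encrypt_cmdline "$luks_uuid" "$root_uuid"
```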

If you haven't written the GRUB bootloader to your disk yet, you would then execute:

grub-install --recheck /dev/sda

(assuming sda is the HDD from which you will boot)

And then (re)generate your grub.cfg:

grub-mkconfig -o /boot/grub/grub.cfg
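The edit to /etc/default/grub can be scripted as well. The function below is a minimal sketch (set_grub_cmdline is a hypothetical name, not a GRUB tool). It replaces the whole GRUB_CMDLINE_LINUX_DEFAULT= line, so any options you already had there, such as quiet, must be included in the new value.

```shell
#!/bin/bash
# set_grub_cmdline: hypothetical helper that rewrites the
# GRUB_CMDLINE_LINUX_DEFAULT= line of a GRUB defaults file and keeps the
# original as <file>.bak. Assumes the value contains no '|' or '&'.
set_grub_cmdline() {
    local file="$1" cmdline="$2"
    # Refuse to guess if the expected line is missing
    if ! grep -q '^GRUB_CMDLINE_LINUX_DEFAULT=' "$file"; then
        echo "no GRUB_CMDLINE_LINUX_DEFAULT= line in $file" >&2
        return 1
    fi
    sed -i.bak "s|^GRUB_CMDLINE_LINUX_DEFAULT=.*|GRUB_CMDLINE_LINUX_DEFAULT=\"${cmdline}\"|" "$file"
}

# Usage (as root), then regenerate grub.cfg:
#   set_grub_cmdline /etc/default/grub \
#       "luks.uuid=<luks-uuid> root=UUID=<root-uuid> rw"
#   grub-mkconfig -o /boot/grub/grub.cfg
```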

Kernel boot options for sd-encrypt and sd-lvm2 in /boot/loader/entries/arch.conf

On newer machines with UEFI firmware I use systemd-boot (managed with bootctl) as the bootloader. The main boot menu is configured in /boot/loader/loader.conf, which looks like:

[archjun@gl553rog loader]$ cat loader.conf

timeout 5

default arch

This particular menu contains only a single entry, but you can add entries for other Linux distros (systemd-boot can chainload GRUB's EFI binary) and for other OSes like Windows.

The OS-specific config files are stored under /boot/loader/entries/ and my arch-specific config is in /boot/loader/entries/arch.conf

title Archlinux

linux /vmlinuz-linux

initrd /intel-ucode.img

initrd /initramfs-linux.img

options luks.uuid=ba04cb93-328c-4f9a-a2a9-4a86cee0f592 luks.name=ba04cb93-328c-4f9a-a2a9-4a86cee0f592=luks root=/dev/mapper/ARCH-rootvol rw

options acpi_osi=! acpi_osi="Windows 2009"

The kernel boot options appear on the lines starting with the keyword options.

For luks.uuid you need to specify the UUID of your LUKS partition BEFORE it has been opened by cryptsetup. luks.name is not strictly necessary; it simply assigns an easy-to-read name to the opened LUKS container, and its syntax is luks.name=<UUID>=<name>, with no UUID= prefix. Finally, root tells the kernel where your root filesystem is located so that it can proceed with booting. You can specify it with the /dev/mapper path or by UUID; if you use the UUID, you must use the syntax root=UUID=...
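If you maintain several machines, a short Bash function can print an entry in the same layout as my arch.conf. This is a sketch under a few assumptions: make_arch_entry is a hypothetical name, the kernel and microcode image paths are the ones from my entry above, and the machine-specific acpi_osi line is left out. luks.name is written as luks.name=<UUID>=<name>, the form documented in the systemd-cryptsetup-generator man page.

```shell
#!/bin/bash
# make_arch_entry: hypothetical helper that prints a systemd-boot entry
# for a root filesystem on LVM2 inside LUKS, using sd-encrypt parameters.
#   $1 = UUID of the LUKS partition (before it is opened)
#   $2 = name to give the opened LUKS container (luks.name)
#   $3 = root device, e.g. /dev/mapper/ARCH-rootvol or UUID=<fs-uuid>
make_arch_entry() {
    local luks_uuid="$1" name="$2" root="$3"
    cat <<EOF
title Archlinux
linux /vmlinuz-linux
initrd /intel-ucode.img
initrd /initramfs-linux.img
options luks.uuid=${luks_uuid} luks.name=${luks_uuid}=${name} root=${root} rw
EOF
}

# Usage (as root; arch-sd.conf is a hypothetical new entry file):
#   make_arch_entry ba04cb93-328c-4f9a-a2a9-4a86cee0f592 luks \
#       /dev/mapper/ARCH-rootvol > /boot/loader/entries/arch-sd.conf
```

Writing to a new entry file rather than overwriting arch.conf leaves the known-good entry in the boot menu until the new one has been tested.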

After editing your arch.conf it is not necessary to rebuild any images or regenerate configs as is the case with GRUB.

Warning: Do not mix up the syntax for sd-encrypt and encrypt mkinitcpio HOOKS

Before systemd became popular, the initial ramdisk for Archlinux used busybox. When booting from an encrypted root and LVM2 with busybox, the mkinitcpio HOOKS used are encrypt and lvm2 (as opposed to sd-encrypt and sd-lvm2 for systemd)

An /etc/mkinitcpio.conf customized for LUKS and LVM2 (without systemd) would look like:

HOOKS="base udev encrypt autodetect modconf block lvm2 filesystems keyboard fsck"

Notice that the encrypt hook comes just after udev. In a systemd-based initramfs, however, udev is replaced by the systemd hook (and encrypt by sd-encrypt).
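Because the two hook families look so similar, it is easy to end up with a mixed HOOKS line. The Bash function below is a rough sanity check I am sketching here (check_hooks is not an mkinitcpio feature). It only encodes the pairing described in this post, using the hook names as of Dec. 2016: encrypt and lvm2 go with udev, while sd-encrypt and sd-lvm2 go with systemd.

```shell
#!/bin/bash
# check_hooks: hypothetical sanity check for a HOOKS string.
# Warns (on stderr) about busybox hooks used with systemd and about
# sd-* hooks used with udev; returns 1 if any problem was found.
check_hooks() {
    local -a hooks
    read -r -a hooks <<< "$1"
    local h has_systemd=0 has_udev=0 status=0
    # First pass: which init flavour is the image built around?
    for h in "${hooks[@]}"; do
        case "$h" in
            systemd) has_systemd=1 ;;
            udev) has_udev=1 ;;
        esac
    done
    # Second pass: flag hooks that belong to the other flavour
    for h in "${hooks[@]}"; do
        case "$h" in
            encrypt|lvm2)
                if (( has_systemd )); then
                    echo "warning: '$h' is a busybox hook; with systemd use sd-$h" >&2
                    status=1
                fi ;;
            sd-encrypt|sd-lvm2)
                if (( has_udev )); then
                    echo "warning: '$h' needs the systemd hook, not udev" >&2
                    status=1
                fi ;;
        esac
    done
    return "$status"
}
```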

The related settings in /etc/default/grub (BIOS) or /boot/loader/entries/arch.conf (UEFI) would then look something like the following:

/etc/default/grub

GRUB_CMDLINE_LINUX_DEFAULT="cryptdevice=UUID=5a2527d8-8972-43f6-a32d-2c50f734f7a9:ARCH root=/dev/mapper/ARCH-rootvol"

/boot/loader/entries/arch.conf

title Arch

linux /vmlinuz-linux

initrd /intel-ucode.img

initrd /initramfs-linux.img

options cryptdevice=UUID=f121bc44-c8c2-4881-b9d5-da4d1b49f3e4:ARCH root=/dev/mapper/ARCH-rootvol rw

The cryptdevice parameter only works with the encrypt hook in your initial ramdisk. If you are using sd-encrypt, you must replace cryptdevice=UUID=<uuid>:<name> with luks.uuid=<uuid> (optionally adding luks.name=<uuid>=<name> to keep the same name for the opened container).
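When migrating an existing machine from encrypt to sd-encrypt, the parameter can be translated mechanically. The function below is a sketch under stated assumptions: cryptdevice_to_sd_encrypt is a hypothetical name, and it only handles the cryptdevice=UUID=<uuid>:<name> form used in this post. Other forms (device paths, trailing :options such as allow-discards) are rejected rather than guessed at. The root= parameter can usually stay as it is, since the LVM device path does not depend on which hook opened the container.

```shell
#!/bin/bash
# cryptdevice_to_sd_encrypt: hypothetical helper that rewrites an
# encrypt-hook parameter of the form cryptdevice=UUID=<uuid>:<name>
# into the equivalent sd-encrypt parameters.
cryptdevice_to_sd_encrypt() {
    local arg="$1" rest uuid name
    rest="${arg#cryptdevice=UUID=}"
    # If the prefix was not present, nothing was stripped
    if [[ "$rest" == "$arg" || "$rest" != *:* ]]; then
        echo "unsupported: $arg" >&2
        return 1
    fi
    uuid="${rest%%:*}"   # text before the first ':'
    name="${rest#*:}"    # text after the first ':'
    # A second ':' means extra options, which this sketch does not translate
    if [[ -z "$uuid" || -z "$name" || "$name" == *:* ]]; then
        echo "unsupported: $arg" >&2
        return 1
    fi
    printf 'luks.uuid=%s luks.name=%s=%s\n' "$uuid" "$uuid" "$name"
}
```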

References:

https://wiki.archlinux.org/index.php/Dm-crypt/System_configuration#mkinitcpio

Saturday, December 31, 2016

Saturday, December 17, 2016

Mirroring PyPI locally

I recently had to set up a local PyPI (Python Package Index) mirror at a client site so that developers could download pip packages locally instead of from the Internet. I used bandersnatch to mirror the packages hosted on PyPI. Bandersnatch can be installed via pip and mirroring requires just two steps.

Customize /etc/bandersnatch.conf

The first time you run bandersnatch, /etc/bandersnatch.conf will be automatically generated:

[root@ospctrl1 Documents]# bandersnatch

2017-06-28 16:05:11,761 WARNING: Config file '/etc/bandersnatch.conf' missing, creating default config.

2017-06-28 16:05:11,761 WARNING: Please review the config file, then run 'bandersnatch' again.

In this file you will specify the path into which you will download PyPI packages. I set my download path as follows:

[mirror]

; The directory where the mirror data will be stored.

directory = /MIRROR/pypi

By default, the generated file contains a log-config line pointing to /etc/bandersnatch-log.conf, but if that file does not exist, bandersnatch crashes with the error ConfigParser.NoSectionError: No section: 'formatters'. I simply commented out the line to fix this problem:

;log-config = /etc/bandersnatch-log.conf

You can see a sample bandersnatch.conf file at this pastebin:

http://paste.openstack.org/show/586323/
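Both edits can also be applied with sed. This is a minimal sketch: patch_bandersnatch_conf is a hypothetical name, and it assumes the generated file has a single uncommented "directory = ..." line and a "log-config = ..." line, as in the excerpts above.

```shell
#!/bin/bash
# patch_bandersnatch_conf: hypothetical helper applying the two edits above
# to a generated bandersnatch.conf, keeping the original as <file>.bak.
#   $1 = path to bandersnatch.conf
#   $2 = mirror directory, e.g. /MIRROR/pypi (must not contain '|' or '&')
patch_bandersnatch_conf() {
    local conf="$1" dir="$2"
    # 1) Point every uncommented "directory = ..." line at the mirror path
    #    (assumed to be the only such line, in the [mirror] section).
    # 2) Comment out log-config so a missing log config cannot crash bandersnatch.
    sed -i.bak \
        -e "s|^directory *=.*|directory = ${dir}|" \
        -e 's|^log-config *=|;log-config =|' \
        "$conf"
}

# Usage (as root):
#   patch_bandersnatch_conf /etc/bandersnatch.conf /MIRROR/pypi
```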

Start the mirroring process

You can start mirroring PyPI with the following command:

sudo bandersnatch mirror

As of Oct. 2016, PyPI takes up 380 GB on disk. Although mirroring requires just two steps, you are not done yet: you still have to make the packages available over your network and set up pip clients to use your local mirror.

Make the local mirror available over the network

Once you have mirrored the pip packages, the next step is to either set up an NFS share for the path where the PyPI packages are mirrored or serve the mirror over HTTP using a web server like Apache, nginx, darkhttpd, etc. I chose darkhttpd because it requires no setup whatsoever: you simply invoke darkhttpd with the path you wish to share over HTTP. If you run it with sudo, it serves files on port 80, but if you run it as a regular user, it serves files on port 8080. You can choose a different TCP port with the --port option.

sudo darkhttpd /MIRROR

Now the document root for the web server is /MIRROR, under which the pypi folder is located. I generally place all my local mirrors (e.g. Ubuntu 16.04 repo, CentOS 7 repo, RHEL repo, etc.) under the document root so that they are all available over HTTP.

Assuming your local mirror machine has the IP address 10.10.10.5, if you navigate to http://10.10.10.5 in your browser (or with cURL) you should be able to see the pypi folder.

Customize pip clients to use local PyPI mirror

On client machines, create the directory ~/.pip and then create ~/.pip/pip.conf (if it doesn't exist already). Now add the following:

[global]

index-url = http://10.10.10.5/pypi/web/simple

trusted-host = 10.10.10.5

This of course assumes that clients are on the 10.10.10.x subnet and that your PyPI mirror is located on 10.10.10.5

In addition, the web server's document root should be /MIRROR as detailed in the previous section.
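On many client machines it may be easier to create this file with a small function. This is a sketch: write_pip_conf is a hypothetical name, and it deliberately refuses to overwrite an existing pip.conf so that settings already there are not lost.

```shell
#!/bin/bash
# write_pip_conf: hypothetical helper that creates a per-user pip.conf
# pointing at the local mirror described above.
#   $1 = mirror host or IP, e.g. 10.10.10.5
#   $2 = config directory (defaults to ~/.pip)
write_pip_conf() {
    local host="$1" dir="${2:-$HOME/.pip}"
    # Never clobber an existing config; merge by hand instead
    if [[ -e "$dir/pip.conf" ]]; then
        echo "$dir/pip.conf already exists; add the settings by hand" >&2
        return 1
    fi
    mkdir -p "$dir" || return 1
    cat > "$dir/pip.conf" <<EOF
[global]
index-url = http://${host}/pypi/web/simple
trusted-host = ${host}
EOF
}

# Usage (as the regular user on each client):
#   write_pip_conf 10.10.10.5
```

For a one-off test without touching any config file, pip accepts the same settings on the command line: pip install --index-url http://10.10.10.5/pypi/web/simple --trusted-host 10.10.10.5 followed by a package name.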


Saturday, December 10, 2016

How to create a mirror of the entire npm index including attachments using npm-fullfat-registry fullfat.js

In a previous post I attempted to create a local npm mirror by simply caching all the packages I installed using npm install pkgname. Unfortunately, this approach is very slow, downloading only about 100 MB per hour. Considering that all the packages in npm take up more than 1.2 TB, this speed won't do. The recommended method for creating a local npm mirror is to use the NoSQL database CouchDB, in which packages from npm are stored directly.

Step One

Install couchdb from your distro's package manager or download from the Apache CouchDB page and build from source. As of Dec 10, 2016 version 1.6 is available from the Fedora 24 official repos while version 2.0+ is available from the Archlinux repos.

Once couchdb is installed, start the couchdb service. On Fedora 24+ and Archlinux, you can do this with:

sudo systemctl start couchdb

Step Two

Access Fauxton, the web GUI for CouchDB, by navigating to:

http://localhost:5984/_utils

Follow the prompts to create an admin user and password. If you don't create an admin user, anyone connecting to localhost:5984 will be able to create and delete databases. Also create a new database by clicking on the gear icon at the top-left. The screenshot below shows the Fauxton UI for couchdb 1.6:

Step Three

Make sure that npm (nodejs package manager) is installed and then create a new directory into which you will install the npm package npm-fullfat-registry. Then from that directory, run as local user:

npm install npm-fullfat-registry

You will then find a sub-directory named node_modules and below that npm-fullfat-registry/bin.

cd node_modules/npm-fullfat-registry/bin

In this sub-directory you will find a single file named fullfat.js

This is the program you need to execute in order to create a local npm mirror, assuming you have already installed couchdb and have created a DB for this program to write to.

fullfat.js takes the following arguments:

-f or --fat : the url to the couchdb database for storing packages

-s or --skim : the url to the npm package index

--seq-file : file which keeps track of the current package being downloaded from npm

--missing-log : file which stores the names and sequence numbers of packages that cannot be found

To save myself the hassle of entering these parameters every time I want to invoke fullfat.js, I created a convenience script in Bash:

#!/bin/bash
# fullfat.sh
# Last Updated: 2016-11-08
# Jun Go
# Invokes fullfat.js for creating a local npm mirror containing
# npm index as well as attachments. This script is intended to
# be launched by 'npm-fullfat-helper.sh'

LOCALDB=http://user:pw@localhost:5984/registry
SKIMDB=https://skimdb.npmjs.com/registry

./fullfat.js -f "$LOCALDB" -s "$SKIMDB" --seq-file=registry.seq \
    --missing-log=missing.log

Of course you will need to replace user and pw with the CouchDB admin username and password you created in Fauxton. The script above works, but it is not sufficient: fullfat.js crashes every so often, so I created a monitor script that restarts my fullfat.sh wrapper script whenever fullfat.js crashes. My monitor script is named npm-fullfat-helper.sh:

#!/bin/bash
# npm-fullfat-helper.sh
# Last Updated: 2016-11-08
# Jun Go
# During the mirroring process for npm, binary file attachments
# are saved into a local couchdb DB named 'registry', but sometimes
# downloading some packages fails or times out, which stops the
# entire process. If you manually resume with fullfat.js, you can
# start again where you left off. This script removes the need to
# do this manually.

until ./fullfat.sh; do
    printf "%s\n" "fullfat.js crashed with exit code $?. Respawning" >&2
    sleep 1
done

Using the script above, mirroring npm with fullfat.js becomes much more robust, as it is relaunched whenever the process exits with anything other than exit code 0. But this is still not sufficient, because sometimes fullfat.js gets stuck while trying to download certain packages. No matter how many times it is restarted, certain packages (especially those with dozens of versions) never finish downloading, so you are left with a lot of tar.gz files in temp directories but no final PUT to CouchDB. When this happens you have to manually edit the sequence file (which keeps track of which package is currently being downloaded). For example, if fullfat.js is stuck and registry.seq contains the number 864117, you must increment it by 1 to 864118. When you then relaunch the monitor script, fullfat.js should move on to the next package. If the package name is still unchanged, edit registry.seq once more and increment the new sequence number by one.
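The manual increment can be wrapped in a small function so the number is not mistyped. This is a sketch under stated assumptions: bump_seq is a hypothetical name, the sequence file is assumed to hold a single decimal integer (as registry.seq does above), and npm-fullfat-helper.sh should be stopped first so that fullfat.js cannot overwrite the file while you edit it.

```shell
#!/bin/bash
# bump_seq: hypothetical helper that advances the sequence number stored in
# fullfat.js's --seq-file by one, so the next run skips the stuck package.
# Assumes the file holds a single decimal integer.
bump_seq() {
    local file="${1:-registry.seq}" seq next
    # Strip whitespace and newlines left by editors
    seq=$(tr -d '[:space:]' < "$file") || return 1
    if [[ ! "$seq" =~ ^[0-9]+$ ]]; then
        echo "unexpected contents in $file: '$seq'" >&2
        return 1
    fi
    # Force base 10 so a value with leading zeros is not read as octal
    next=$((10#$seq + 1))
    printf '%s\n' "$next" > "$file"
    echo "$file: $seq -> $next"
}

# Usage: stop npm-fullfat-helper.sh with Ctrl-C, then
#   bump_seq registry.seq
#   ./npm-fullfat-helper.sh
```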

Conclusion

Mirroring the npm index with file attachments using couchdb is much faster than simply caching packages installed through npm install foo. I get speeds of about 1 GB/hr. The problem is that manual intervention is required when fullfat.js gets stuck, i.e. you must manually change the sequence number stored in the sequence file (which I called registry.seq above) so that fullfat.js will skip a problematic package and go on to the next one. Another inconvenience is that as of Dec 12, 2016 the documentation for npm-fullfat-registry has not been updated. If you follow along with these old instructions, you will be told to invoke the following:

npm-fullfat-registry -f [url to local db] -s [url to npm package index]

But this won't work because the program you need to actually execute is fullfat.js. Simply replace npm-fullfat-registry above with fullfat.js and you'll be good to go. Keep in mind that the path to fullfat.js is node_modules/npm-fullfat-registry/bin in the directory into which you invoked npm install npm-fullfat-registry.


Thursday, December 1, 2016

Using an external monitor with bumblebee and intel-virtual-output

I have an ASUS U36JC which uses both Intel integrated video and an Nvidia GeForce 310M GPU. On Windows, Nvidia has a solution called Optimus that automatically switches between the Intel and Nvidia GPUs depending on the computing task. For example, when you are editing a document, the notebook uses the low-power integrated Intel GPU, and when you are watching a movie or doing video editing, it uses the power-hungry Nvidia GPU.

On Linux, however, this GPU-switching does not happen automatically. There are several different ways you can enable Nvidia GPU's on Linux, but I chose bumblebee-nvidia with the proprietary Nvidia driver as I explain in a previous post. To launch regular applications using the Nvidia GPU, you must launch your program by prefixing it with 'optirun', i.e.:

optirun [program name]

But in order to use multiple monitors on an Optimus-enabled notebook like the ASUS U36JC, you can't just invoke something like optirun startx. You need to edit a few Bumblebee X11 settings and make sure that bumblebeed (bumblebee daemon) is running to use the HDMI external monitor port (which is hard-wired to the discrete Nvidia GPU. The VGA port on the ASUS U36JC uses Intel integrated graphics, however, so bumblee is not required)

The required steps to use an external monitor through the HDMI port on Fedora 24 are as follows:

1. Make sure you have already installed and configured bumblebee.

2. sudo dnf install intel-gpu-tools (this pkg contains intel-virtual-output)

3. In /etc/bumblebee/xorg.conf.nvidia make the following changes:

4. Start bumblebee daemon: sudo systemctl start bumblebeed

5. Launch intel-virtual-output (the default is to launch as a daemon, but I will launch in foreground mode so I can easily kill the process with Ctrl-C when I am done using an external monitor). You can run this program as the local user (When executing as root, it doesn't work).

# intel-virtual-output -f

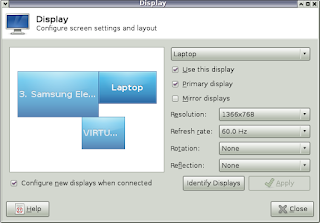

Now X windows should be able to detect an external monitor connected to the video ports wired to the Nvidia GPU. Running xfce4-display-settings shows the following:

The monitor named "VIRTUAL" is a path-through interface created by intel-virtual-output.

Arandr, the front-end to the Xorg screen layout/orientation tool xrandr, also detects multiple screens:

References:

https://wiki.archlinux.org/index.php/bumblebee#Output_wired_to_the_NVIDIA_chip

https://bugzilla.redhat.com/show_bug.cgi?id=1195962

On Linux, however, this GPU switching does not happen automatically. There are several different ways to enable Nvidia GPUs on Linux, but I chose bumblebee-nvidia with the proprietary Nvidia driver, as I explain in a previous post. To run regular applications on the Nvidia GPU, you must prefix the program name with 'optirun', i.e.:

optirun [program name]

But to use multiple monitors on an Optimus-enabled notebook like the ASUS U36JC, you can't just invoke something like optirun startx. To use the HDMI external monitor port, which is hard-wired to the discrete Nvidia GPU, you need to edit a few Bumblebee X11 settings and make sure that bumblebeed (the Bumblebee daemon) is running. (The VGA port on the ASUS U36JC is driven by the Intel integrated graphics, however, so Bumblebee is not required for it.)

The required steps to use an external monitor through the HDMI port on Fedora 24 are as follows:

1. Make sure you have already installed and configured bumblebee.

2. sudo dnf install intel-gpu-tools (this pkg contains intel-virtual-output)

3. In /etc/bumblebee/xorg.conf.nvidia make the following changes:

- Option "AutoAddDevices" "true"

- Option "UseEDID" "true"

- # Option "UseDisplayDevice" "none"

You can see my edited xorg.conf.nvidia file here:

4. Start bumblebee daemon: sudo systemctl start bumblebeed

5. Launch intel-virtual-output. By default it runs as a daemon, but I launch it in foreground mode (-f) so I can easily kill the process with Ctrl-C when I am done using the external monitor. Run this program as your regular user; when I executed it as root, it didn't work.

$ intel-virtual-output -f
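The xorg.conf.nvidia changes from step 3 can also be applied with sed. This is a sketch under stated assumptions: patch_xorg_nvidia is a hypothetical name, and it assumes the stock file sets AutoAddDevices and UseEDID to "false" and has an uncommented UseDisplayDevice line. Lines that were already changed or commented out are left alone, so running it twice is harmless.

```shell
#!/bin/bash
# patch_xorg_nvidia: hypothetical helper applying the three step-3 changes
# to a Bumblebee xorg.conf.nvidia, keeping the original as <file>.bak.
patch_xorg_nvidia() {
    local conf="${1:-/etc/bumblebee/xorg.conf.nvidia}"
    sed -i.bak -E \
        -e 's/^([[:space:]]*Option[[:space:]]+"AutoAddDevices"[[:space:]]+)"false"/\1"true"/' \
        -e 's/^([[:space:]]*Option[[:space:]]+"UseEDID"[[:space:]]+)"false"/\1"true"/' \
        -e 's/^([[:space:]]*)(Option[[:space:]]+"UseDisplayDevice")/\1# \2/' \
        "$conf"
}

# Usage (as root), then restart the daemon if it is already running:
#   patch_xorg_nvidia /etc/bumblebee/xorg.conf.nvidia
#   systemctl restart bumblebeed
```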

Now X should be able to detect an external monitor connected to the video ports wired to the Nvidia GPU. Running xfce4-display-settings shows the following:

The monitor named "VIRTUAL" is a pass-through interface created by intel-virtual-output.

Arandr, the front-end to the Xorg screen layout/orientation tool xrandr, also detects multiple screens:

References:

https://wiki.archlinux.org/index.php/bumblebee#Output_wired_to_the_NVIDIA_chip

https://bugzilla.redhat.com/show_bug.cgi?id=1195962
